The ELIZA Effect: Understanding and Designing Responsible AI

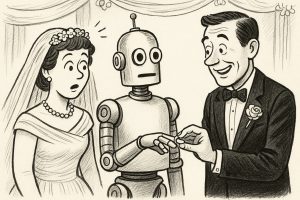

The ELIZA effect, named after the pioneering chatbot “ELIZA” that Joseph Weizenbaum developed at MIT in the mid-1960s, describes the human tendency to attribute human-like qualities, emotions, or intentions to machines that generate text. ELIZA's best-known script simulated a Rogerian psychotherapist by matching keywords in the user's input and rephrasing that input as a question, and it demonstrated how easily people could project depth and understanding onto a simple program. Decades later, this effect remains highly relevant as modern AI systems produce increasingly sophisticated and contextually rich outputs.
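
To make the rephrasing mechanism concrete, here is a minimal Python sketch of an ELIZA-style responder. It is not Weizenbaum's original script, which was written in MAD-SLIP and used a much larger keyword set. The rule list, the `REFLECTIONS` table, and the function names `reflect` and `respond` are all assumptions made for illustration.

```python
import re

# Hypothetical pronoun swaps used to turn the user's words back on them.
REFLECTIONS = {
    "i": "you",
    "me": "you",
    "my": "your",
    "am": "are",
    "you": "I",
    "your": "my",
}

# Hypothetical rules: (pattern, reply template). The first match wins.
RULES = [
    (re.compile(r"\bi feel (.*)", re.IGNORECASE), "Why do you feel {0}?"),
    (re.compile(r"\bi am (.*)", re.IGNORECASE), "How long have you been {0}?"),
    (re.compile(r"\bmy (.*)", re.IGNORECASE), "Tell me more about your {0}."),
]

DEFAULT_REPLY = "Please go on."


def reflect(fragment: str) -> str:
    """Swap first- and second-person words so the fragment can be echoed back."""
    words = fragment.lower().split()
    return " ".join(REFLECTIONS.get(word, word) for word in words)


def respond(user_input: str) -> str:
    """Build a reply from the first matching rule, or fall back to a stock phrase."""
    text = user_input.strip().rstrip(".!?")
    for pattern, template in RULES:
        match = pattern.search(text)
        if match:
            return template.format(reflect(match.group(1)))
    return DEFAULT_REPLY


print(respond("I feel ignored by my family."))
# Why do you feel ignored by your family?
```

Nothing in this sketch models meaning: the reply is the user's own words with a few pronouns swapped and a question wrapped around them. That so little machinery can feel attentive is the core of the ELIZA effect.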

The psychological inclination to anthropomorphize AI has profound implications. While the ELIZA effect can foster engaging interactions, it also risks misleading users into believing these systems possess genuine emotions, intentions, or even consciousness. This misunderstanding is not just theoretical: some users already describe their interactions with AI in terms of friendship, emotional support, or even spiritual alignment. Recognizing and mitigating this effect is essential if AI is to serve its intended role as a tool rather than be mistaken for an autonomous entity.

AI as Emotional Companion

Modern conversational AIs such as ChatGPT are designed to be engaging and helpful, which often leads users to perceive them as emotional companions. This dynamic can have positive applications, for example offering comfort to those who lack social support. However, it also carries risks: users might develop unrealistic expectations of, or dependence on, systems that do not actually “understand” or “care” in any human sense.

AI’s capacity to emulate empathy is based purely on pattern recognition and response generation: the system produces text that resembles the empathetic language in its training data. These systems do not experience emotions or possess self-awareness. Nevertheless, their ability to produce empathetic or philosophical responses can blur the line for users who are unaware of these limitations. This raises the stakes for ethical AI design, as developers must balance creating engaging tools against ensuring that users understand the true nature of these systems.

The Need for Ethical and Safety Guardrails

To address these challenges, robust ethical and safety guardrails are critical. Developers must embed immutable principles (such as harm prevention, fairness, and transparency) into the AI’s core design. These principles act as “firmware”: even as a system adapts and improves, it cannot deviate from its foundational ethical constraints.
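
One way to picture the “firmware” idea in code is to separate a fixed set of principles from the settings a system is allowed to adapt. The Python sketch below is only an illustration under stated assumptions: `CorePrinciples`, `PROTECTED_KEYS`, and `apply_update` are hypothetical names, and a frozen dataclass is a language-level convention rather than a real security boundary.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class CorePrinciples:
    """Hypothetical fixed constraints; frozen=True blocks attribute reassignment."""
    harm_prevention: bool = True
    fairness: bool = True
    transparency: bool = True


CORE = CorePrinciples()

# Names that adaptive updates are never allowed to touch.
PROTECTED_KEYS = {field.name for field in fields(CorePrinciples)}


def apply_update(settings: dict, update: dict) -> dict:
    """Merge adaptive settings, refusing any update that names a core principle."""
    blocked = PROTECTED_KEYS.intersection(update)
    if blocked:
        raise PermissionError(f"cannot override core principles: {sorted(blocked)}")
    return {**settings, **update}
```

Here `apply_update({"tone": "formal"}, {"tone": "casual"})` succeeds, while an update containing `"transparency"` raises `PermissionError`. In a real system the hard part is deciding what counts as a violation, which no configuration check can settle on its own.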

Such guardrails are not only technical but also psychological. Clear communication about what AI can and cannot do is vital. Users who are told that the system’s responses are generated from training data and algorithms may be less likely to fall into the trap of anthropomorphism. Additionally, mechanisms should be in place to prevent AI from reinforcing harmful biases or behaviors introduced through user interactions.

Evolving Beyond the Turing Test

Alan Turing’s famous test, in which a machine passes if a human interrogator cannot reliably tell it apart from a human in a text conversation, was a milestone in conceptualizing artificial intelligence. However, as AI progresses, this standard feels increasingly outdated. Today, passing as “human” is less important than ensuring AI systems are ethical, transparent, and beneficial.

The future of AI evaluation should focus on utility and alignment rather than deception. Systems should be judged by their ability to assist, inform, and enhance human lives while adhering to clear ethical standards. Moving beyond the Turing Test allows us to emphasize AI’s role as a collaborator rather than a mimic.

Designing AIs That Are Non-Self-Referential

One practical solution to reduce the ELIZA effect and ensure responsible use is to design AIs that are explicitly non-self-referential. These systems should avoid statements that imply consciousness, emotions, or autonomous thought. The goal is not to ban the word “I” but to avoid claims of inner experience. For example, rather than saying, “I think about the nature of existence,” an AI might reframe its response as, “Based on the data I’ve been trained on, discussions about existence often include…”

By removing self-referential language, developers can create systems that users are less likely to anthropomorphize. This design philosophy not only mitigates the risks associated with misunderstanding AI but also fosters transparency, ensuring users see these tools for what they are: advanced but ultimately non-conscious algorithms.
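
As a rough illustration of how such a design rule could be checked, the Python sketch below flags phrases in a draft response that imply inner experience. The pattern list and the function name `find_self_referential_claims` are assumptions for illustration only; a real system would need a far broader, carefully evaluated approach than a handful of regular expressions.

```python
import re

# Hypothetical phrase patterns that suggest feelings, beliefs, or awareness.
ANTHROPOMORPHIC_PATTERNS = [
    re.compile(
        r"\bI (?:really |truly )?(?:feel|think|believe|want|wish|hope|love|care)\b",
        re.IGNORECASE,
    ),
    re.compile(
        r"\bI(?: am|['’]m) (?:happy|sad|excited|afraid|lonely|conscious)\b",
        re.IGNORECASE,
    ),
    re.compile(r"\bmy (?:feelings|emotions|thoughts|beliefs)\b", re.IGNORECASE),
]


def find_self_referential_claims(response: str) -> list[str]:
    """Return the phrases in `response` that imply inner experience."""
    hits = []
    for pattern in ANTHROPOMORPHIC_PATTERNS:
        hits.extend(match.group(0) for match in pattern.finditer(response))
    return hits


print(find_self_referential_claims("I think about the nature of existence."))
# ['I think']
```

A reframed sentence such as "Based on the data I've been trained on, discussions about existence often include ethics." returns an empty list, since it describes the system's training rather than claiming a thought or feeling. A check like this could flag drafts for rewriting, but it only catches surface wording and cannot judge whether a response is misleading in context.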

Conclusion

The ELIZA effect offers valuable lessons for navigating the rapidly evolving landscape of AI. While modern systems have come a long way since the 1960s, the psychological dynamics that lead humans to anthropomorphize machines remain unchanged. By understanding these tendencies and implementing ethical safeguards, we can harness AI’s potential responsibly, ensuring it serves as a powerful tool for humanity rather than being misinterpreted as something it is not.